With the race to deliver fully operational 5G production networks upon us, the popularity of Edge AI and edge computing will grow in tandem. An estimated 750 million Edge AI chips for mobile devices are expected to be sold in 2020 alone. And with 5G networks promising higher data transfer rates, more and more computation will be performed near the 'edge' or on the user's mobile device.

Why is this important? For one, it can give the user a higher level of privacy. Currently, when your personal data is uploaded to a public or private cloud, many services do not keep it encrypted 100% of the time, due to either technical or business constraints. With 5G networks providing faster data transfer rates, however, your data can reside in the cloud fully encrypted 100% of the time. When you need to access it, your mobile device can make a secure request to the cloud to download particular buckets of encrypted data, decrypt them locally, and run computations or AI models against them to return a result to you.

Another benefit of the intersection of 5G and Edge AI technologies is that it can improve an organization's workflow. With faster data transfer rates, more devices on the edge can react quickly and provide immediate results without a central authority approving the task. In other words, voice assistants get smarter, photography and video shooting get more sophisticated, cars get safer, data security gets better, and robotics, both consumer and industrial, takes innovative leaps. And health care outcomes improve dramatically!

The very first component of AI is data. Investment in collecting current data sources and creating new ones will provide the foundation on which AI algorithms can be trained.

Protection and preservation of data is another necessary component of AI. To protect and preserve the integrity of the data, developers require trusted, responsible, and inclusive legal and regulatory guidance that provides the architecture for governance. In Europe, the General Data Protection Regulation (GDPR) is an example of what is needed. The GDPR went into effect in May 2018 to ensure that organizations, including those in the health care industry, handle individuals' information responsibly.

Government agencies are needed to help solve some of these issues. Partnerships with academia, not-for-profit organizations, and commercial entities will ensure everyone has a voice at the table.

The next component of AI is training. People who require training in the field of AI include computer scientists, data scientists, medical professionals, legal professionals, and policy makers, all of whom bring unique skills to the table and can evaluate the risks.

Finally, diversity is needed within AI development. AI will perform better when diversity is all-inclusive. We have to learn to set aside past inhibitions and build algorithms based on the underlying data itself. Historical factors that have set us apart, including race, gender, culture, and socioeconomic status, will have to be leveled to truly find and realize AI’s potential.

Businesses are asked to use AI to drive growth, improve customer experiences, manage risk and compliance, and improve product delivery. As their industries advance, organizations are investing in transforming how they operate with AI and machine intelligence, and they expect results. So how can you drive higher returns on your AI investments? To answer this question, businesses must address three challenges facing AI implementation before stakeholders will accept it:

Data Availability and Quality

Most enterprises recognize that unlocking their data with machine learning is the most crucial driver of business competitiveness. Data must be available, accessible, and validated.

User acceptance

How do you overcome the myriad challenges to deliver an AI solution that genuinely drives business impact? And how do you implement these solutions repeatedly and govern them at scale? Stakeholder acceptance of AI will facilitate implementation. And it will be based on quick and steady results that continually build upon each other. Once management sees value, continued expansion of AI into all facets of the business enterprise can occur.

Complex and Lengthy Projects

Management is inherently impatient. They want results quickly and often. Rather than trying to eat the whole elephant at once, select projects that can be accomplished quickly and provide value to the company, then continually build upon those early successes!

Artificial Intelligence provides knowledge using algorithms to interpret large data sets. This advanced knowledge can be used to make better business decisions. These decisions are not “perfect”, but provide a deeper insight supported with advanced analytics to give the enterprise the tools needed to stay ahead of the curve.

Staying ahead of your competitors is critical in business. Access to data and insights is key to estimating efforts, making smart decisions, and staying ahead of markets’ and customers' ever-changing demands. According to Boston Consulting Group, 83 percent of businesses consider AI-based ventures a strategic priority. Spending on AI systems is poised to top $97B in 2023, per the International Data Corporation (IDC) AI Systems Spending Guide. Unfortunately, fewer than 25% of these global businesses have started the transformation journey.

However, the businesses that do leverage AI and machine learning solutions are able to change and improve their day-to-day operations. For example, smart technology solutions are now enabling health care providers to quickly scan large medical charts and test results for anomalies and patterns, helping pioneer the next wave of preventive treatments and procedures. AI also empowers insurance companies, hospitals, and physicians to reduce the time and cost required to diagnose patients and guide them toward optimal care and treatment.

Disruptive forces in the form of Artificial Intelligence (AI) have the potential to create revolutionary change within health care. In the not-too-distant future, new paradigms will shift the focus from disease (reactive) to how we stay healthy (proactive).

The scenario of the future may look like this. After birth, every person would get a baseline, multi-faceted profile that includes screening for common genetic and rare diseases. Over the course of your life, cost-effective, clinically approved devices could provide precise and accurate readings on a wide range of biometrics, such as blood pressure, heart rate, temperature, and blood tests. They could also scan for detrimental environmental factors, including exposure to viruses and toxins, and capture the impact of behavioral factors such as sleep and drug or other chemical use.

The data collected from the population could be used to create new AI models for health care. This is not a pie-in-the-sky idea, but rather an incremental approach to what could evolve over the next few years.

This approach could reap huge rewards, such as predictive analysis for heart attack, stroke, and cancer, leading to earlier diagnoses and preventative treatments.

Delivery of health care may take the form of intelligent bots that would be integrated within the IT infrastructure of the home through digital assistants or smartphones to triage symptoms, educate patients, and ensure that patients adhere to their medical regimens.

After reading the Top Trends on the Gartner Hype Cycle for Artificial Intelligence, 2019 article, which surveyed organizations that have deployed artificial intelligence (AI) systems, it was clear that SkinScreen fell within two of the categories identified: Augmented Intelligence and Edge AI. Augmented intelligence is a human-centered partnership model of people and artificial intelligence (AI) working together to enhance cognitive performance, including learning, decision making, and new experiences. Edge AI refers to the use of AI techniques embedded in Internet of Things (IoT) endpoints, gateways, and edge devices, in applications ranging from autonomous vehicles to streaming analytics. At SkinScreen we believe these two areas within AI will continue to expand as more sophisticated mobile and end devices that can better support the execution of AI models saturate the market.

The future of ‘standard’ medical practice might be here sooner than anticipated, where a patient could see a computer before seeing a doctor. Through advances in artificial intelligence (AI), it appears possible for the days of misdiagnosis and treating disease symptoms rather than their root cause to move behind us.

The accumulating data generated in clinics and stored in electronic medical records through common tests and medical imaging allows for more applications of artificial intelligence and high performance data-driven medicine. These applications have changed and will continue to change the way both doctors and researchers approach clinical problem-solving.

However, while some algorithms can compete with and sometimes outperform clinicians in a variety of tasks, they have yet to be fully integrated into day-to-day medical practice. Why? Even though these algorithms can meaningfully impact medicine and bolster the power of medical interventions, there are numerous regulatory concerns that need to be addressed first for further integration within the medical field.

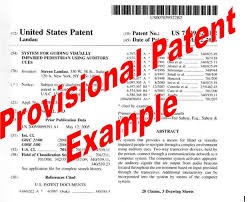

SkinScreen® is now considered "patent pending" with the USPTO as of 16 October 2019 (Provisional Patent Application No. 62/915,826). Our intellectual property rights continue to get stronger with this step forward. We are preparing our non-provisional patent application at this time to meet the USPTO timeline to secure our patent within the United States.
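The timeline mentioned above can be made concrete with a small date calculation. The sketch below assumes the general USPTO rule that a non-provisional application claiming the benefit of a provisional must be filed within 12 months of the provisional filing date. That rule is not stated in this post, the function name is hypothetical, and weekend or holiday adjustments are ignored.

```python
from datetime import date


def nonprovisional_deadline(provisional_filed: date) -> date:
    """Return the same calendar date one year after the provisional filing.

    Assumption for illustration: a 12-month window, with no adjustment
    for weekends or federal holidays.
    """
    try:
        return provisional_filed.replace(year=provisional_filed.year + 1)
    except ValueError:
        # 29 February has no counterpart in a non-leap year; falling back
        # to 28 February is a simplifying assumption, not legal guidance.
        return provisional_filed.replace(year=provisional_filed.year + 1, day=28)


# Provisional filing date taken from the post: 16 October 2019
filed = date(2019, 10, 16)
deadline = nonprovisional_deadline(filed)
print(deadline.isoformat())  # 2020-10-16
```

A patent attorney, not a script, should confirm the actual deadline; the point is only that the window is counted from the provisional filing date.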

Many companies strive to get a competitive advantage in the marketplace. They are reaching for data-driven approaches to business decisions rather than relying on the traditional “intuition” approach. Can algorithms now provide enough insight to make decisions when success or failure involves very large amounts of business capital?

At issue right now is that in many cases a severe shortfall of decision-making data prevents companies from training algorithms effectively. However, it appears to be only a matter of time before AI takes on the more strategic role of assisting board members by providing insight on future opportunities, which research and development projects to pursue, and which geographical markets to enter.

However, we don’t want to arrive at a future in which humans just follow algorithms blindly and effectively eliminate choice. It is still critical for managers to be conversant in the basis on which an AI system functions.

In order for companies to execute a decision, buy-in is needed at all levels within a company. Otherwise the only outcome will be a probability produced by some AI model. If a manager can’t explain the model, the rationale that it produces will always be suspect.

While the technology presents some challenges, it will be a useful tool to support dermatologists’ diagnoses. The same technology that suggests friends for you to tag in photos on social media could provide an exciting new way to help dermatologists diagnose skin cancer. However, while artificial intelligence systems for skin cancer detection have shown promise in research settings, there is still a lot of work to be done before the technology is appropriate for real-world use.

One murky area is the skin cancer “scores” that AI algorithms assign to suspicious spots. It’s not yet clear how a dermatologist would interpret those numbers. The training of AI systems presents an even larger barrier. Hundreds of thousands of photos that have been confirmed as benign or malignant are used to teach the AI models to recognize skin cancer, but all of these images were captured in optimal conditions; they’re not just any old photos snapped with a smartphone.

Just because a computer can read these validated data sets with near 100 percent accuracy doesn’t mean it can read any image. Individuals have different phones, lighting, backgrounds, and pixelation quality. Another challenge resides in the datasets used to train the algorithms. The images used so far in training AI systems are almost exclusively of light-skinned patients.

The algorithm is only as good as what you’ve taught it to do. For example, if you’ve not taught the AI models to diagnose melanoma in different skin colors, then you’re at risk of not being able to do it when the algorithm is complete. AI will never get to the point of being 100 percent accurate in skin cancer detection, but dermatologists can help shape the technology in its early stages so patients can get the best care possible.
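One way to make this risk visible is to report accuracy separately for each patient group instead of as a single overall number. The following is a minimal sketch of that idea, not SkinScreen's evaluation code: the function name, the group labels, and the toy records are all hypothetical and invented purely for illustration.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Compute accuracy per group.

    records: iterable of (group, true_label, predicted_label) tuples.
    Returns a dict mapping each group to its fraction of correct predictions.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, truth, predicted in records:
        total[group] += 1
        if truth == predicted:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}


# Hypothetical toy results (not real data)
toy_records = [
    ("light", "malignant", "malignant"),
    ("light", "benign", "benign"),
    ("light", "benign", "benign"),
    ("light", "malignant", "benign"),
    ("dark", "malignant", "benign"),
    ("dark", "benign", "benign"),
]

per_group = accuracy_by_group(toy_records)
print(per_group)  # {'light': 0.75, 'dark': 0.5}
```

A large gap between groups, or a group with very few records, is a signal that the training images do not cover that group well.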

The U.S. Patent and Trademark Office (USPTO) has just approved the SkinScreen trademark for official use. This means after an official search of the USPTO database and publication of the trademark there was found to be no conflicting usages of the SkinScreen trademark against already registered trademarks or other entities. As a result, we can now apply a ® to our trademark to indicate it has been officially approved and registered by the USPTO.

The SkinScreen Mobile Apps for iOS and Android platforms are nearly complete. These mobile apps will allow you to take photos with your phone, or use existing photos in your photo library, to determine whether a skin lesion is present in the image. This calculation will be performed completely on your smart device, if it is capable, to ensure 1) the highest level of privacy and 2) that it works untethered from the network. Our anticipated release date is November 2019. Stay tuned!

Automated classifiers may be better than physicians when it comes to diagnosing pigmented skin lesions, but human supervision is still needed, researchers found.

All machine-learning algorithms reached a mean of 2.01 more correct diagnoses than did all human readers (19.92 vs 17.91; P<0.0001), reported Harald Kittler, MD, of the Medical University of Vienna in Austria, and colleagues in The Lancet Oncology.

When the top three machine-learning algorithms were compared with 27 human experts who had over a decade of experience, the algorithms still outperformed the experts (25.43 vs 18.78; P<0.0001), the investigators found.

Notably, the gap between the top three algorithms and the experts was significantly smaller for images gathered from centers that did not contribute images to the training set than for the other image sets, although the humans still underperformed (11.4% vs 3.6%; P<0.0001), the researchers wrote.

In this study, machine-learning classifiers performed better than experienced human readers in the diagnosis of pigmented skin lesions, suggesting that machine learning should have a more important role in clinical practice, the investigators said.

These findings "could improve the accuracy of the diagnosis of pigmented skin lesions in areas where specialist dermatological service is not readily available, and might accelerate the acceptance and implementation of automated diagnostic devices in the field of skin cancer diagnosis," Kittler's group wrote.

This study falls in line with prior research showing that machines are good at detecting concerning lesions, noted Jamie Altman, MD, of Aesthetic Dermatology Associates in Media, Pennsylvania, who was not involved in the study. "Some of the other [studies] have not shown [machine-learning] to be superior to humans, whereas this one did show that they performed slightly better on looking at dermatoscopic images specifically," Altman told MedPage Today.

While the topic has never been timelier, academic psychology has studied computer algorithms’ ability to outperform subjective human judgments since the 1950s. The field known as “clinical vs. statistical prediction” was ushered in by psychologist Paul Meehl, who published a “disturbing little book” (as he later called it) documenting 20 studies that compared the predictions of well-informed human experts with those of simple predictive algorithms.

The studies ranged from predicting how well a schizophrenic patient would respond to electroshock therapy to how likely a student was to succeed at college. Meehl’s study found that in each of the 20 cases, human experts were outperformed by simple algorithms based on observed data such as past test scores and records of past treatment. Subsequent research has decisively confirmed Meehl’s findings: More than 200 studies have compared expert and algorithmic prediction, with statistical algorithms nearly always outperforming unaided human judgment.

In the few cases in which algorithms didn’t outperform experts, the results were usually a tie. The cognitive scientists Richard Nisbett and Lee Ross are forthright in their assessment: “Human judges are not merely worse than optimal regression equations; they are worse than almost any regression equation.”

Subsequent research summarized by Daniel Kahneman in Thinking, Fast and Slow helps explain these surprising findings. Kahneman’s title alludes to the “dual process” theory of human reasoning, in which distinct cognitive systems underpin human judgment. System 1 (“thinking fast”) is automatic and low-effort, tending to favor narratively coherent stories over careful assessments of evidence. System 2 (“thinking slow”) is deliberate, effortful, and focused on logically and statistically coherent analysis of evidence. Most of our mental operations are System 1 in nature, and this generally serves us well, since each of us makes hundreds of daily decisions. Relying purely on time- and energy-consuming System 2-style deliberation would produce decision paralysis. But—and this is the non-obvious finding resulting from the work of Kahneman, Amos Tversky, and their followers—System 1 thinking turns out to be terrible at statistics.

Artificial intelligence is highly dependent on data quality. Quality data is the most important feature that has enabled AI to become successful!

Science with data

SkinScreen, LLC emphasizes sound data science and data validity across our entire analytics practice. It is a tenet of our success! We have more data scientists focused on cognitive, AI, and machine learning projects than most large corporations. The better the data, the more accuracy and precision can be achieved within the field of AI.

The trend is the increasing ubiquity of data-driven decision making and artificial intelligence applications. Once again, an important lesson comes from behavioral science: A body of research dating back to the 1950s has established that even simple predictive models outperform human experts’ ability to make predictions and forecasts. This implies that judiciously constructed predictive models can augment human intelligence by helping humans avoid common cognitive misinterpretations and pitfalls.

Today, predictive models are routinely consulted to hire baseball players (Moneyball) and other types of employees, underwrite bank loans and insurance contracts, triage emergency-room patients, deploy public-sector case workers, identify safety violations, and evaluate movie scripts, to mention a few uses. More recently, the emergence of big data and the renaissance of artificial intelligence (AI) have made comparisons of human and computer capabilities considerably more fraught. The availability of web-scale datasets enables engineers and data scientists to train machine learning algorithms capable of translating texts, winning at games of skill, discerning faces in photographs, recognizing words in speech, piloting drones, and driving cars. The economic and societal implications of such developments are massive. A recent World Economic Forum report predicted that the next four years will see more than 5 million jobs lost to AI-fueled automation and robotics.

Let’s dwell on that last statement for a moment: What about the art of forecasting itself? Could one imagine computer algorithms replacing the human experts who make such forecasts? Investigating this question will shed light on both the nature of forecasting (a domain involving an interplay of data science and human judgment) and the limits of machine intelligence. There is both bad news (depending on your perspective) and good news to report. The bad news is that algorithmic forecasting has limits that machine learning-based AI methods cannot surpass; human judgment will not be automated away anytime soon. The good news is that the fields of psychology and collective intelligence are offering new methods for improving and de-biasing human judgment. Algorithms can augment human judgment but not replace it altogether; at the same time, training people to be better forecasters and pooling the judgments and fragments of partial information of smartly assembled teams of experts can yield still-better results.
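The claim about pooling judgments has a simple arithmetic core: under squared error, the error of an averaged forecast can never exceed the average error of the individual forecasts. The sketch below illustrates this with hypothetical probability forecasts invented for the example; it is a toy demonstration, not a forecasting method described in this post.

```python
from statistics import mean


def squared_error(forecast: float, outcome: float) -> float:
    """Squared difference between a probability forecast and the outcome."""
    return (forecast - outcome) ** 2


# Hypothetical forecasts from four experts for an event that did occur
forecasts = [0.9, 0.4, 0.7, 0.5]
outcome = 1.0

# Average of each expert's own error
average_individual_error = mean(squared_error(f, outcome) for f in forecasts)

# Error of the single pooled (averaged) forecast
pooled_error = squared_error(mean(forecasts), outcome)

print(pooled_error <= average_individual_error)  # True
```

Simple averaging is only the most basic form of pooling; weighting forecasters by track record is a common refinement.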

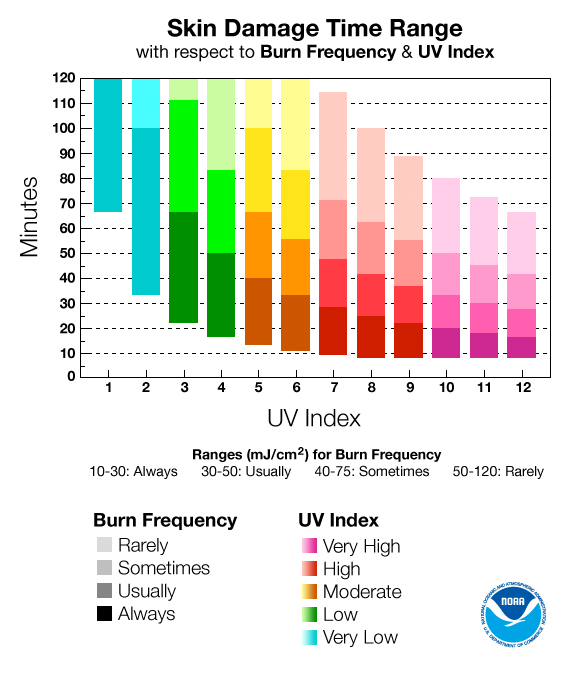

The UV Index is a forecast of the probable intensity of skin-damaging ultraviolet radiation reaching the surface during the solar noon hour: 11:30-12:30 Local Standard Time, or 12:30-13:30 Local Daylight Time. The greater the UV Index, the greater the amount of skin-damaging UV radiation. How much UV radiation is needed to actually damage one's skin depends on several factors. In general, the darker one's skin is (that is, the more melanin it contains), the longer it takes, or the more UV radiation is needed, to cause erythema (skin reddening).

The figure above shows a look-up chart where you may cross check your propensity to burn versus the UV Index. For those who always burn and never tan, the times to burn are relatively short compared to those who almost always tan.
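The chart's burn times live in the figure and are not reproduced here. As a complementary sketch, the function below maps a UV Index value to the exposure categories commonly published alongside the index (Low, Moderate, High, Very High, Extreme). The category boundaries follow the widely used WHO scale rather than anything stated in this post, and the function name is hypothetical.

```python
def uv_exposure_category(uv_index: float) -> str:
    """Map a UV Index value to its commonly used exposure category.

    Boundaries assumed from the standard scale: 0-2 Low, 3-5 Moderate,
    6-7 High, 8-10 Very High, 11 and above Extreme.
    """
    if uv_index < 0:
        raise ValueError("UV Index cannot be negative")
    if uv_index < 3:
        return "Low"
    if uv_index < 6:
        return "Moderate"
    if uv_index < 8:
        return "High"
    if uv_index < 11:
        return "Very High"
    return "Extreme"


print(uv_exposure_category(7))  # High
```

The category says how intense the radiation is; how quickly a given person burns still depends on skin type, as the chart shows.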

SkinScreen has partnered with Amazon.com to educate customers about, and offer, sun mitigation products of all types, including pet products. These products focus on mitigating the negative impact of sun exposure on individuals as well as animals and materials.

If you don’t see a product that you think we should consider for listing, please send us the location where we can review it for inclusion.

Patent Process

SkinScreen, LLC decided early on that its intellectual capital was valuable to the success and growth of the company and required protection from other developers and products.

After watching SHARK TANK for many episodes, the SkinScreen owners realized that the investors or ‘sharks’ of SHARK TANK were really looking at what intellectual property the small entity has or may possess in the near future, and what steps the small entity had taken to protect it. Having protections such as trademarks, copyright and copyright registration and patent(s) allowed the sharks to determine whether a competitive advantage was present within the small entity.

If you have a patentable invention and have decided what type of patent application to file, you are likely curious about what steps need to be taken to patent your invention. Two types were under consideration. The first is the design patent, which protects the ornamental, visual features of a product and is particularly suited to preventing knockoff imitations. The second is the utility patent, which protects the features and functions of SkinScreen’s invention and can be filed as a provisional or non-provisional patent application. This article will help explain the various stages of the patent process.

SkinScreen, LLC. was more concerned about SkinScreen’s features and functions, thus the Utility patent was our choice to pursue.

The next decision was whether to file a provisional or non-provisional patent application. After discussion with our lawyers, and based on current patent law, it was recommended that a provisional patent application be pursued. The reason is that United States patent law has adopted the “First In” method of determining ownership (formally, a first-inventor-to-file system). That is, those through the door first win, and can claim ownership of the intellectual property!

What is a Provisional Patent? What is the benefit of a Provisional Patent?

The forms required for a Provisional Patent are essentially the same forms required for a non-provisional patent. The difference is that the Provisional Patent normally receives a filing date and application number more quickly from the United States Patent and Trademark Office if all the forms have been filled out and are error free. Thus, your intellectual property can be protected more quickly under the “First In” rules previously discussed.

The non-provisional utility patent application would also include the corresponding transmittal forms and application data sheets. An application that meets the filing requirements is awarded a filing date and application number, which are both provided in the Filing Receipt issued by the United States Patent and Trademark Office. Once a filing date is secured, the claimed invention is officially patent pending.

Patent pending is the term used to describe a patent application that has been filed with the patent office, but has not issued as a patent. Patent pending indicates that the inventor is pursuing protection, but the scope of protection, or whether a patent will even issue, is still undetermined. Marking an invention “patent pending” puts the public on notice that the underlying invention may be protected and that copyists should be cautious. Any applicant who has a non-expired provisional application or a pending non-provisional application can indicate that the related subject matter is “patent pending”.

The steps in the process: It was up to our Chief Scientist and Software Architect along with the CEO to review the application and confirm the invention is adequately disclosed. This was a critical stage as, once the application has been filed, the initial disclosure can no longer be amended. If it is later determined that critical information has been left out of the filing, the only meaningful chance to rectify the issue may be to file another patent application, along with another set of filing fees.

What we look forward to in the future!

Prosecuting the patent application

After the patent application has been filed, the United States Patent and Trademark Office assigns the application to a patent examiner, who is responsible for reviewing the application, searching for relevant prior art, and raising any objections and rejections against the patent application in an office action. It is then up to the inventor and patent attorney to respond to the examiner's objections and rejections to demonstrate that the patent application has met all of the requirements of patentability. We expect several rounds of dialogue with the patent examiner before the application is determined to be patentable and in condition for allowance, is abandoned by the applicant, or has its final rejections appealed.

The prosecution process can be lengthy, often several years. Depending on the number of responses required to put the patent application in condition for allowance, the process may be expensive as well.

Allowance

When the United States Patent and Trademark Office determines a patent application is patentable, it sends a Notice of Allowability to the applicant indicating that the patent application is in condition for allowance. The Notice of Allowability may include requirements to be met before the patent issues, but the requirements are typically formalities rather than substantive matters (e.g., corrected drawings, substitute oath or declaration, etc.). In response, the applicant may correct any formalities and pay the issue fee required by the Patent Office. The issue fee for a utility patent is currently $1,510 and the issue fee for a design patent is currently $860. As with many patent-related fees, the issue fee is reduced by half for small entities.

Issuance

After receiving the issue fee payment for the allowed patent application, the Patent Office assigns a patent number and issues the patent.

The newly issued patent is published online and in the Official Gazette of the United States Patent and Trademark Office. The Official Gazette publishes weekly on Tuesday.

Maintenance

After a utility patent has been issued, the United States Patent and Trademark Office requires payment of maintenance fees to maintain the patent in force. Design patents do not require any maintenance fees. The maintenance fees are due 3.5, 7.5 and 11.5 years from the date of the original patent grant. The current maintenance fee schedule is $980 at 3.5 years, $2,480 at 7.5 years and $4,110 at 11.5 years. As with many patent-related fees, the maintenance fees are reduced by half for small entities.
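To see what these figures add up to, the sketch below totals the utility patent issue fee and the three maintenance fees quoted in this article, at both the standard and the small-entity (half) rate. The function and variable names are hypothetical, attorney and filing fees are excluded, and the amounts are only those quoted here; the USPTO revises its fee schedule periodically.

```python
# Fee amounts in USD, as quoted in this article
UTILITY_ISSUE_FEE = 1510
MAINTENANCE_FEES = {3.5: 980, 7.5: 2480, 11.5: 4110}  # years after grant -> fee


def utility_patent_fees(small_entity: bool = False) -> dict:
    """Return the issue and maintenance fees plus their total.

    Small entities pay half of each fee, per the article.
    """
    divisor = 2 if small_entity else 1
    schedule = {"issue": UTILITY_ISSUE_FEE // divisor}
    for years, fee in MAINTENANCE_FEES.items():
        schedule[f"maintenance at {years} years"] = fee // divisor
    schedule["total"] = sum(schedule.values())
    return schedule


standard = utility_patent_fees()
small = utility_patent_fees(small_entity=True)
print(standard["total"], small["total"])  # 9080 4540
```

On these numbers, keeping a utility patent in force for its full term costs $9,080 in issue and maintenance fees at the standard rate, or $4,540 for a small entity.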

Let me start out by saying some things that need to be said up front:

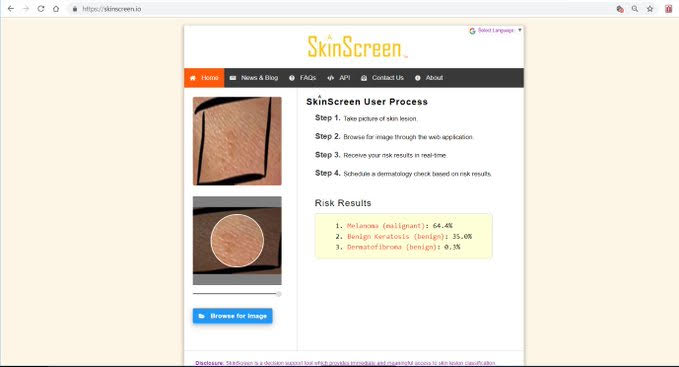

SkinScreen, LLC. has created an incredible platform for digital sales. The product SkinScreen is supported on three platform levels. They include mobile Apps, exposed API service endpoints, and a web application. All three platforms perform in the same manner and produce the same consistent results. The outlook for this product is bright! Now let me get into some details concerning SkinScreen itself.

Product Market Fit

The term “product market fit” can be confusing if you put too much into it. It’s really just what it sounds like – finding a match (fit) between your proposed product and the market you’re going after. The whole notion of testing your product market fit isn’t new – organizations ran focus groups for decades before they used the phrase.

That said, however you think about the term, the point is the same: before you invest a lot, invest a little. Before you pursue a full-court press on a marketing campaign, find ways to validate your hypotheses. SkinScreen has tested the product market by allowing the web-based platform to be used “FREE OF CHARGE” by the user community. This has allowed valuable customer feedback to be incorporated to create a better product, shorten the development cycle, and develop significant interest in the tool.

Where Products Fail

Most people fail at finding a product market fit because they spend so much time creating their v1 product. What I mean is that the bar they set for their beta version, before they roll something out to test it, is too high.

They spend more money than they should (even if it’s just their effort or volunteer time), and if you don’t have a good product market fit, it’s not worth moving forward.

According to the SkinScreen software architect, the platforms are developed through an accelerated build process that leverages the SAFe Agile development framework. As a result, the tool is constantly adding new features and adapting to any changes in the environment in a responsible manner that ensures no breakage or experienced downtime for the user.

Create Simple Products that Fill a Void

And that’s why I love products like SkinScreen. It is a simple product that serves a clear and defined function. SkinScreen founders decided to create something small and see if people would use it (as is). And I’m one of the individuals who has used the product and will continue to use the product in the future.

Why SkinScreen, LLC. Should Continue to Build Simple Products

I want to wrap up by highlighting four reasons why SkinScreen, LLC. should pursue simple products.

- People have an easier time knowing if they’re in your target market or not.

- You can grow the products by listening to customers, rather than prospects.

- You can get your product out the door faster – which often means cheaper.

- You can start collecting feedback faster – which helps you adjust your product.

There is really no reason not to build simple products and grow them from there.

And if you’re smart, and your product grows and its market clamors for more and more features – then you can do what Apple did and create an ecosystem for other applications.

Lloyd Carmack (Independent Software Evaluator/SkinScreen User)

In the near future we plan to roll out SkinScreen smartphone applications that will run on Android and Apple iOS mobile devices. The applications are currently in development. They will complement our existing web application and the API service endpoints that third-party entities can access.

Stay tuned!!